
Background

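On March 27, OpenAI announced a major update to its Agents SDK that adds official support for Model Context Protocol (MCP) servers. This capability is likely to shape how agent technology develops, so this chapter summarizes a hands-on walkthrough of using MCP servers from the OpenAI Agents SDK.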

Overview

- Build a simple agent with the OpenAI Agents SDK

- Use MLflow to visualize how the agent runs

- Build an agent that calls an MCP server with the OpenAI Agents SDK

Walkthrough

1. Create the project

```shell
# Initialize the project
uv init mcp-with-openai-agents
cd mcp-with-openai-agents

# Create a virtual environment
uv venv
source .venv/bin/activate

# Install dependencies
uv add openai-agents
```

2. A simple example

```python
import asyncio
import logging
import os

from openai import AsyncOpenAI
from agents import Agent, OpenAIChatCompletionsModel, Runner, function_tool, set_tracing_disabled

# DEBUG level makes the SDK print the request/response details analyzed below
logging.basicConfig(level=logging.DEBUG)

# Read settings from the environment, falling back to the values used in this article
BASE_URL = os.getenv("EXAMPLE_BASE_URL", "https://api.deepseek.com/v1")
API_KEY = os.getenv("EXAMPLE_API_KEY", "sk-0d9449d235*******")
MODEL_NAME = os.getenv("EXAMPLE_MODEL_NAME", "deepseek-chat")

if not BASE_URL or not API_KEY or not MODEL_NAME:
    raise ValueError(
        "Please set EXAMPLE_BASE_URL, EXAMPLE_API_KEY, EXAMPLE_MODEL_NAME via env var or code."
    )

# OpenAI-compatible client pointed at DeepSeek instead of api.openai.com
client = AsyncOpenAI(base_url=BASE_URL, api_key=API_KEY)
# Disable the SDK's default tracing, which exports traces to OpenAI and needs an OpenAI key
set_tracing_disabled(disabled=True)


@function_tool
def get_weather(city: str):
    logging.info(f"[DEBUG] Getting weather for {city}")
    return f"The weather in {city} is sunny."


async def main():
    # This agent uses a custom (non-OpenAI) LLM through the Chat Completions API
    agent = Agent(
        name="Assistant",
        instructions="You only respond in haikus.",
        model=OpenAIChatCompletionsModel(model=MODEL_NAME, openai_client=client),
        tools=[get_weather],
    )

    result = await Runner.run(agent, "What's the weather in Tokyo?")
    print(result.final_output)


if __name__ == "__main__":
    asyncio.run(main())
```

Run the code above. It prints a debug log, and the sections below walk through that log part by part.
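The log records two model turns. In the first turn the model returns a tool call instead of text. The SDK then runs the tool and appends the result to the conversation, and the second turn produces the final answer. The sketch below reproduces that cycle using only the standard library, so it runs offline. `fake_model` is a hypothetical, scripted stand-in for the Chat Completions request. This is a simplification of the loop visible in the log, not the SDK's `Runner` implementation.

```python
import json


def get_weather(city: str) -> str:
    return f"The weather in {city} is sunny."


TOOLS = {"get_weather": get_weather}


def fake_model(messages):
    """Hypothetical model stub: requests a tool call first, then answers from the tool result."""
    if messages[-1]["role"] == "user":
        return {
            "role": "assistant",
            "content": "",
            "tool_calls": [{
                "id": "call_0",
                "type": "function",
                "function": {"name": "get_weather", "arguments": json.dumps({"city": "Tokyo"})},
            }],
        }
    return {"role": "assistant", "content": f"Answer based on: {messages[-1]['content']}", "tool_calls": None}


def run_loop(instructions, user_input, call_model, max_turns=10):
    # Conversation history in the same shape as the messages printed in the log
    messages = [
        {"content": instructions, "role": "system"},
        {"role": "user", "content": user_input},
    ]
    for turn in range(1, max_turns + 1):
        resp = call_model(messages)
        if not resp.get("tool_calls"):
            # No tool calls: this turn's text is the final output
            return resp["content"], turn, messages
        # Record the assistant's tool request, then one "tool" message per call
        messages.append({"role": "assistant", "tool_calls": resp["tool_calls"]})
        for call in resp["tool_calls"]:
            fn = TOOLS[call["function"]["name"]]
            args = json.loads(call["function"]["arguments"])
            messages.append({"role": "tool", "tool_call_id": call["id"], "content": fn(**args)})
    raise RuntimeError("max_turns exceeded")


output, turns, history = run_loop("You only respond in haikus.", "What's the weather in Tokyo?", fake_model)
print(turns, output)
```

The real `Runner.run` also accepts a `max_turns` limit. The `max_turns` parameter here mirrors that idea, so a model that keeps requesting tools cannot loop forever.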

2.1 Log analysis: initialization

```text
DEBUG:openai.agents:Tracing is disabled. Not creating trace Agent workflow
DEBUG:openai.agents:Setting current trace: no-op
DEBUG:openai.agents:Tracing is disabled. Not creating span <agents.tracing.span_data.AgentSpanData object at 0x108bccd70>
DEBUG:openai.agents:Running agent Assistant (turn 1)
DEBUG:openai.agents:Tracing is disabled. Not creating span <agents.tracing.span_data.GenerationSpanData object at 0x108bccfb0>
DEBUG:openai.agents:[
  {
    "content": "You only respond in haikus.",
    "role": "system"
  },
  {
    "role": "user",
    "content": "What's the weather in Tokyo?"
  }
]
Tools:
[
  {
    "type": "function",
    "function": {
      "name": "get_weather",
      "description": "",
      "parameters": {
        "properties": {
          "city": {
            "title": "City",
            "type": "string"
          }
        },
        "required": [
          "city"
        ],
        "title": "get_weather_args",
        "type": "object",
        "additionalProperties": false
      }
    }
  }
]
Stream: False
Tool choice: NOT_GIVEN
Response format: NOT_GIVEN
```
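The `Tools` entry is the JSON schema that the SDK generated from the signature of `get_weather`. The typed parameter `city` becomes a property, it is listed under `required`, and `additionalProperties: false` rules out extra keys. The stdlib-only sketch below reproduces this particular schema. It is an approximation under stated assumptions: it handles only simple scalar parameters, and it treats a parameter as required when it has no default. The SDK itself builds the schema with Pydantic and supports many more types.

```python
import inspect
import json

# Only simple scalar annotations are handled in this sketch
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def sketch_function_tool(func):
    """Approximate the function-tool schema shown in the log (illustrative only)."""
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {
            # Pydantic-style title: "city" -> "City", "max_days" -> "Max Days"
            "title": name.replace("_", " ").title(),
            "type": _JSON_TYPES[param.annotation],
        }
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": inspect.getdoc(func) or "",
            "parameters": {
                "properties": properties,
                "required": required,
                "title": f"{func.__name__}_args",
                "type": "object",
                "additionalProperties": False,
            },
        },
    }


def get_weather(city: str):
    return f"The weather in {city} is sunny."


schema = sketch_function_tool(get_weather)
print(json.dumps(schema, indent=2))
```

The empty `description` in the log is consistent with `get_weather` having no docstring. In this sketch, adding a docstring would fill that field and give the model a hint about when to call the tool.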

2.2 Log analysis: first request to the model

```text
DEBUG:openai._base_client:Request options: {'method': 'post', 'url': '/chat/completions', 'headers': {'User-Agent': 'Agents/Python 0.0.0'}, 'files': None, 'json_data': {'messages': [{'content': 'You only respond in haikus.', 'role': 'system'}, {'role': 'user', 'content': "What's the weather in Tokyo?"}], 'model': 'deepseek-chat', 'stream': False, 'tools': [{'type': 'function', 'function': {'name': 'get_weather', 'description': '', 'parameters': {'properties': {'city': {'title': 'City', 'type': 'string'}}, 'required': ['city'], 'title': 'get_weather_args', 'type': 'object', 'additionalProperties': False}}}]}}
... (middle portion omitted)
DEBUG:openai._base_client:HTTP Request: POST https://api.deepseek.com/v1/chat/completions "200 OK"
DEBUG:openai.agents:LLM resp:
{
  "content": "",
  "refusal": null,
  "role": "assistant",
  "annotations": null,
  "audio": null,
  "function_call": null,
  "tool_calls": [
    {
      "id": "call_0_6f04a7e3-acde-4ff1-b925-68c6294f3955",
      "function": {
        "arguments": "{\"city\":\"Tokyo\"}",
        "name": "get_weather"
      },
      "type": "function",
      "index": 0
    }
  ]
}
```

2.3 Log analysis: invoking the get_weather tool

```text
DEBUG:openai.agents:Tracing is disabled. Not creating span <agents.tracing.span_data.FunctionSpanData object at 0x108c4b290>
DEBUG:openai.agents:Invoking tool get_weather with input {"city":"Tokyo"}
DEBUG:openai.agents:Tool call args: ['Tokyo'], kwargs: {}
INFO:root:[DEBUG] Getting weather for Tokyo
DEBUG:openai.agents:Tool get_weather returned The weather in Tokyo is sunny.
```
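In the model's reply, the `arguments` field is a JSON-encoded string rather than an object. It therefore has to be decoded and matched against the function's parameters before the call. The log line `Tool call args: ['Tokyo'], kwargs: {}` shows `city` being passed positionally. The stdlib sketch below approximates that step with `inspect.Signature.bind`. The SDK also validates the arguments against its generated schema, which this sketch skips, so treat it as an illustration rather than the SDK's code.

```python
import inspect
import json


def get_weather(city: str):
    return f"The weather in {city} is sunny."


def invoke_tool(func, arguments_json):
    """Decode the JSON `arguments` string and call func with it (illustrative sketch)."""
    data = json.loads(arguments_json)  # '{"city":"Tokyo"}' -> {'city': 'Tokyo'}
    # bind() raises TypeError for unknown or missing parameters
    bound = inspect.signature(func).bind(**data)
    print(f"Tool call args: {list(bound.args)}, kwargs: {bound.kwargs}")
    return func(*bound.args, **bound.kwargs)


result = invoke_tool(get_weather, '{"city":"Tokyo"}')
print(result)
```

`BoundArguments.args` collects positional-or-keyword parameters even when they were bound by name. That is why the sketch reports `['Tokyo']` and an empty `kwargs`, matching the shape of the log line.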

2.4 Log analysis: second request to the model

```text
DEBUG:openai.agents:Running agent Assistant (turn 2)
DEBUG:openai.agents:Tracing is disabled. Not creating span <agents.tracing.span_data.GenerationSpanData object at 0x108718dd0>
DEBUG:openai.agents:[
  {
    "content": "You only respond in haikus.",
    "role": "system"
  },
  {
    "role": "user",
    "content": "What's the weather in Tokyo?"
  },
  {
    "role": "assistant",
    "tool_calls": [
      {
        "id": "call_0_6f04a7e3-acde-4ff1-b925-68c6294f3955",
        "type": "function",
        "function": {
          "name": "get_weather",
          "arguments": "{\"city\":\"Tokyo\"}"
        }
      }
    ]
  },
  {
    "role": "tool",
    "tool_call_id": "call_0_6f04a7e3-acde-4ff1-b925-68c6294f3955",
    "content": "The weather in Tokyo is sunny."
  }
]
Tools:
[
  {
    "type": "function",
    "function": {
      "name": "get_weather",
      "description": "",
      "parameters": {
        "properties": {
          "city": {
            "title": "City",
            "type": "string"
          }
        },
        "required": [
          "city"
        ],
        "title": "get_weather_args",
        "type": "object",
        "additionalProperties": false
      }
    }
  }
]
Stream: False
Tool choice: NOT_GIVEN
Response format: NOT_GIVEN
DEBUG:openai._base_client:Request options: {'method': 'post', 'url': '/chat/completions', 'headers': {'User-Agent': 'Agents/Python 0.0.0'}, 'files': None, 'json_data': {'messages': [{'content': 'You only respond in haikus.', 'role': 'system'}, {'role': 'user', 'content': "What's the weather in Tokyo?"}, {'role': 'assistant', 'tool_calls': [{'id': 'call_0_6f04a7e3-acde-4ff1-b925-68c6294f3955', 'type': 'function', 'function': {'name': 'get_weather', 'arguments': '{"city":"Tokyo"}'}}]}, {'role': 'tool', 'tool_call_id': 'call_0_6f04a7e3-acde-4ff1-b925-68c6294f3955', 'content': 'The weather in Tokyo is sunny.'}], 'model': 'deepseek-chat', 'stream': False, 'tools': [{'type': 'function', 'function': {'name': 'get_weather', 'description': '', 'parameters': {'properties': {'city': {'title': 'City', 'type': 'string'}}, 'required': ['city'], 'title': 'get_weather_args', 'type': 'object', 'additionalProperties': False}}}]}}
DEBUG:openai._base_client:HTTP Request: POST https://api.deepseek.com/v1/chat/completions "200 OK"
DEBUG:openai.agents:LLM resp:
{
  "content": "Sunshine graces Tokyo, \nWarm and bright, the skies are clear\u2014 \nPerfect day to roam.",
  "refusal": null,
  "role": "assistant",
  "annotations": null,
  "audio": null,
  "function_call": null,
  "tool_calls": null
}
```

2.5 Log analysis: output

```text
Sunshine graces Tokyo,
Warm and bright, the skies are clear—
Perfect day to roam.
DEBUG:openai.agents:Shutting down trace provider
DEBUG:openai.agents:Shutting down trace processor <agents.tracing.processors.BatchTraceProcessor object at 0x108304b10>
```

3. Visualizing the agent run with MLflow

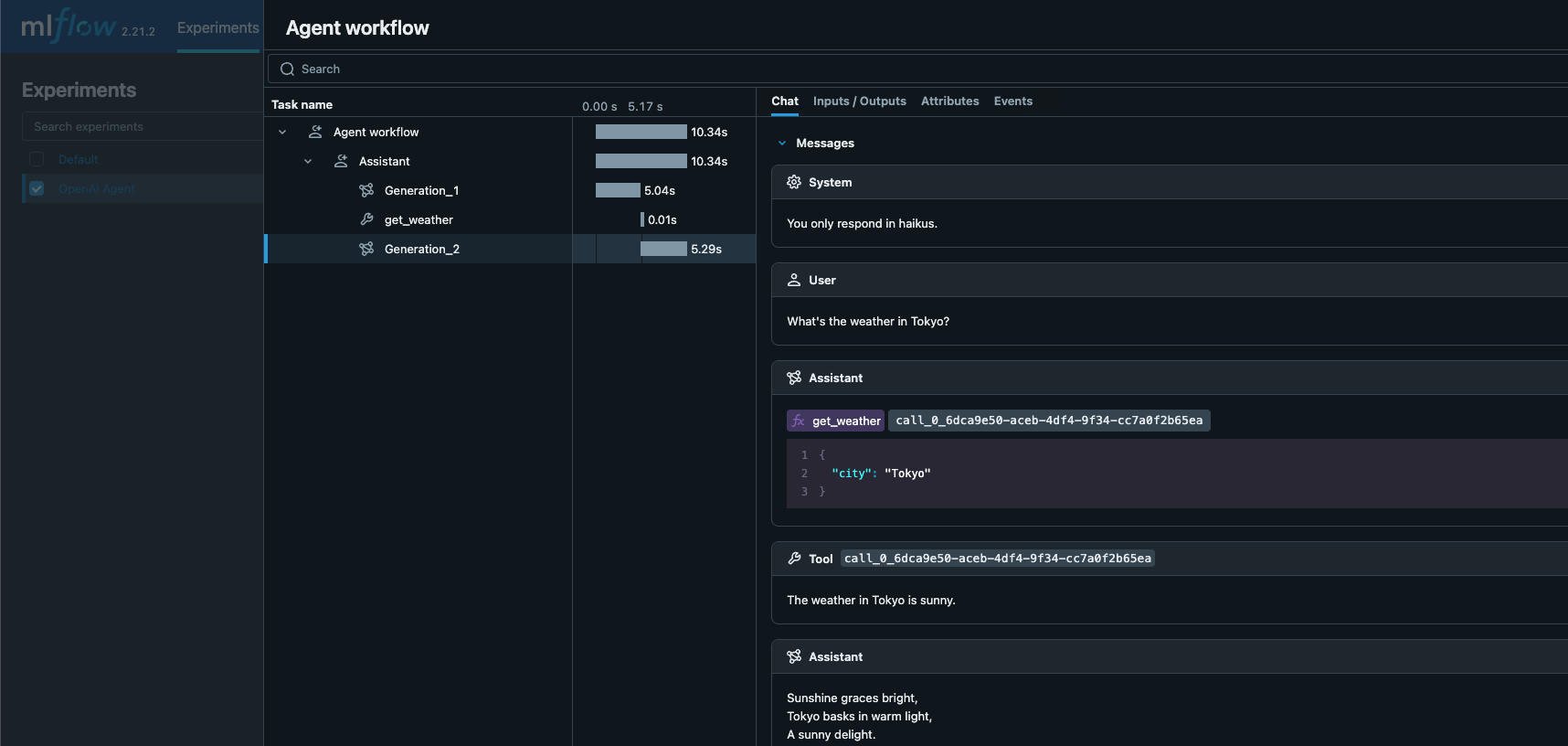

The raw logging output above is hard to read, so this section brings in the open-source tool MLflow to inspect the agent's runs visually.

3.1 About MLflow

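MLflow is an open-source platform designed to help machine learning practitioners and teams manage the complexity of the machine learning process. It covers the full lifecycle of an ML project and keeps every stage manageable, traceable, and reproducible.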

Documentation: https://mlflow.org/docs/latest/

3.2 Install dependencies

```shell
pip install mlflow

# Or install it with uv
# uv add mlflow
```

3.3 Start the server

After installation, start the tracking server with:

```shell
mlflow server --host 127.0.0.1 --port 5000
```

3.4 Modify the agent code

Add the following to the agent code:

```python
import mlflow

# Enable auto tracing for OpenAI Agents SDK
mlflow.openai.autolog()

# Optional: Set a tracking URI and an experiment
mlflow.set_tracking_uri("http://127.0.0.1:5000")
mlflow.set_experiment("OpenAI Agent")
```

3.5 Re-run the agent code

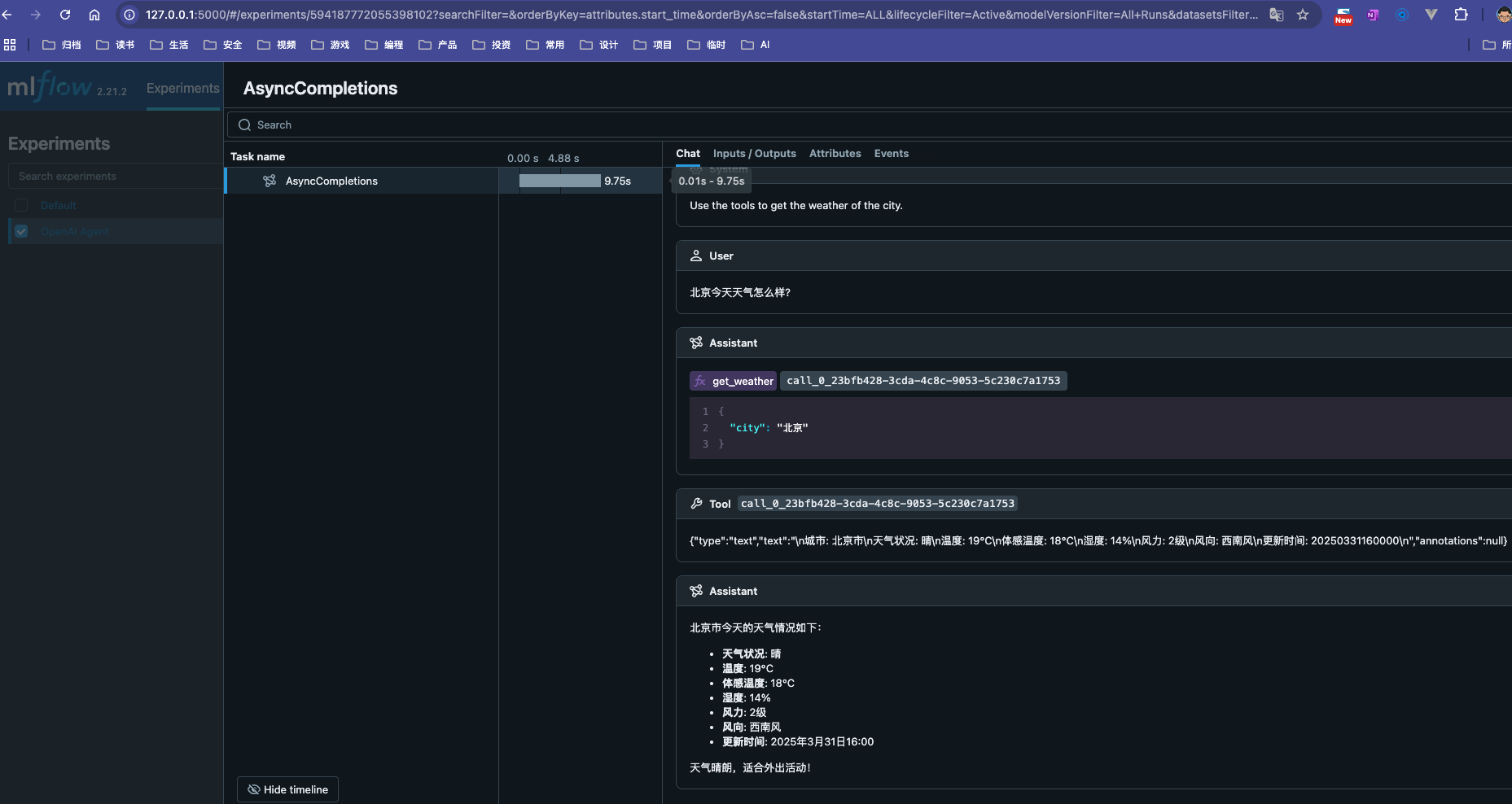

Re-run the agent from the example above, then open http://127.0.0.1:5000 and click Traces under the "OpenAI Agent" experiment to see the agent's call records.
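A trace viewer such as MLflow's Traces tab presents a run as a tree of spans. The debug log in section 2 already names the span types involved: `AgentSpanData`, `GenerationSpanData`, and `FunctionSpanData`. To make that nesting concrete, here is a toy, stdlib-only recorder built on context managers. It is not MLflow's API or the SDK's. The span hierarchy and names are assumptions chosen to mirror the run in section 2.

```python
import contextlib
import time


class SpanRecorder:
    """Toy recorder that nests spans the way a trace viewer displays them (hypothetical)."""

    def __init__(self):
        self.roots = []
        self._stack = []

    @contextlib.contextmanager
    def span(self, kind, name):
        node = {"kind": kind, "name": name, "children": [], "start": time.perf_counter()}
        # Attach to the currently open span, or start a new root
        (self._stack[-1]["children"] if self._stack else self.roots).append(node)
        self._stack.append(node)
        try:
            yield node
        finally:
            node["duration_s"] = time.perf_counter() - node["start"]
            self._stack.pop()


def render(nodes, depth=0):
    """Flatten the span tree into indented lines."""
    lines = []
    for n in nodes:
        lines.append("  " * depth + f"{n['kind']}: {n['name']}")
        lines.extend(render(n["children"], depth + 1))
    return lines


rec = SpanRecorder()
with rec.span("agent", "Assistant"):
    with rec.span("generation", "turn 1"):
        pass  # first model call returns a tool call
    with rec.span("function", "get_weather"):
        pass  # tool execution
    with rec.span("generation", "turn 2"):
        pass  # second model call returns the haiku
print("\n".join(render(rec.roots)))
```

Each span records its own duration. That per-step timing, together with the inputs and outputs, is what makes a trace view easier to read than the flat debug log.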

4. An agent that calls an MCP server

Next, starting from the example in OpenAI's official documentation, we use the Agents SDK to build an agent that can call an MCP server.

4.1 Start the mcp-weather-server

Start the MCP SSE server built in the earlier article "From an MCP Protocol Overview to a Hands-On MCP Server".

```shell
uv run weather.py
```

Note: you can confirm in Cherry Studio that the server is running.
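`MCPServerSse` talks to this server over MCP's HTTP+SSE transport. The client opens a long-lived GET request on the `/sse` URL. The server's first event, `endpoint`, tells the client where to POST its JSON-RPC requests, and responses arrive as `message` events on the same stream. The stdlib-only sketch below parses such a stream offline to show that handshake. The frames and session id are made up, and this illustrates the transport only, not how the Agents SDK or the MCP Python SDK implement it.

```python
from urllib.parse import urljoin


def parse_sse(stream_text):
    """Split a Server-Sent Events payload into (event, data) tuples (minimal sketch)."""
    events = []
    event_type, data_lines = "message", []
    for line in stream_text.splitlines():
        if line == "":
            # A blank line ends the current event; events without data are dropped
            if data_lines:
                events.append((event_type, "\n".join(data_lines)))
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # one optional space after the colon is not part of the value
            if field == "event":
                event_type = value
            elif field == "data":
                data_lines.append(value)
    return events


# Hypothetical first frames from GET http://localhost:8000/sse (session id is made up)
sample = (
    ": ping\n"
    "\n"
    "event: endpoint\n"
    "data: /messages/?session_id=abc123\n"
    "\n"
)

sse_url = "http://localhost:8000/sse"
for event, data in parse_sse(sample):
    if event == "endpoint":
        # The endpoint may be relative, so resolve it against the SSE URL
        post_url = urljoin(sse_url, data)
        print("JSON-RPC messages go to:", post_url)
```

This handshake is why only the `/sse` URL appears in the agent's `MCPServerSse` params: the POST endpoint is discovered at connection time.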

4.2 Modify the agent code

- Replace the weather function.

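Replace the `get_weather()` tool and the agent setup from "2. A simple example" with the `run()` function below. Instead of a local function tool, `run()` gets its tools from the MCP server.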

```python
async def run(mcp_server: MCPServer):
    # Initialize the OpenAI-compatible client
    client = AsyncOpenAI(base_url=BASE_URL, api_key=API_KEY)
    set_tracing_disabled(disabled=True)

    agent = Agent(
        name="Assistant",
        instructions="Use the tools to get the weather of the city.",
        mcp_servers=[mcp_server],
        model=OpenAIChatCompletionsModel(model=MODEL_NAME, openai_client=client),
    )

    message = "北京今天天气怎么样?"  # "What's the weather like in Beijing today?"
    print(f"Running: {message}")
    result = await Runner.run(starting_agent=agent, input=message)
    print(result.final_output)
```

- Modify the code in `main()`:

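```python
async def main():
    # Connect to the weather MCP server over SSE; the connection closes when the block exits
    async with MCPServerSse(
        name="weather SSE Server",
        params={
            "url": "http://localhost:8000/sse",
        },
    ) as server:
        await run(server)
```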

The rest of the code stays the same. The complete code is:

import asyncio

import logging

import mlflow

import os

from openai import AsyncOpenAI

from agents import Agent, OpenAIChatCompletionsModel, Runner, set_tracing_disabled

from agents.mcp import MCPServer, MCPServerSse

# 初始化变量

BASE_URL = os.getenv("BASE_URL", "https://api.deepseek.com/v1")

API_KEY = os.getenv("API_KEY", "sk-0d9449d2355a4c63******")

MODEL_NAME = os.getenv("MODEL_NAME", "deepseek-chat")

# 启用OpenAI Agents SDK的自动追踪

mlflow.openai.autolog()

# 设置追踪URI和实验

mlflow.set_tracking_uri("http://127.0.0.1:5000")

mlflow.set_experiment("OpenAI Agent")

async def run(mcp_server: MCPServer):

# 初始化OpenAI客户端

client = AsyncOpenAI(base_url=BASE_URL, api_key=API_KEY)

set_tracing_disabled(disabled=True)

agent = Agent(

name="Assistant",

instructions="Use the tools to get the weather of the city.",

mcp_servers=[mcp_server],

model=OpenAIChatCompletionsModel(model=MODEL_NAME, openai_client=client),

)

message = "北京今天天气怎么样?"

print(f"Running: {message}")

result = await Runner.run(starting_agent=agent, input=message)

print(result.final_output)

async def main():

async with MCPServerSse(

name="weather SSE Server",

params={

"url": "http://localhost:8000/sse",

},

) as server:

await run(server)

if __name__ == "__main__":

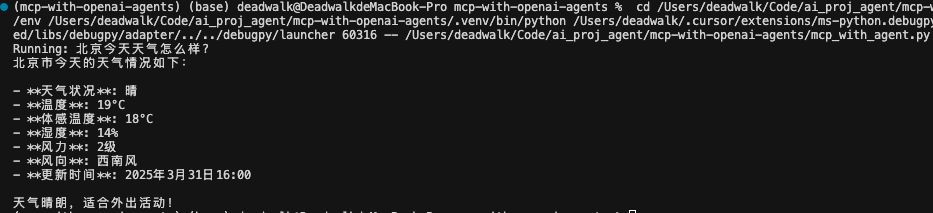

asyncio.run(main())4.3 运行Agent的代码
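During the run, the agent gets the MCP server's tool list and offers those tools to the model as ordinary function tools. When the model picks one, the call goes back to the server. The sketch below shows both conversions with plain dicts, following the MCP `tools/list` result shape (`name`, `description`, `inputSchema`) and the `tools/call` request shape. The tool name and schema are hypothetical, because the tools exposed by weather.py are not shown here. This is not the SDK's conversion code.

```python
import json


def mcp_tool_to_chat_tool(mcp_tool):
    """Map one MCP tool definition to a Chat Completions function tool (illustrative)."""
    return {
        "type": "function",
        "function": {
            "name": mcp_tool["name"],
            "description": mcp_tool.get("description") or "",
            # MCP already describes tool inputs with JSON Schema, so it can be reused as-is
            "parameters": mcp_tool.get("inputSchema") or {"type": "object", "properties": {}},
        },
    }


# Hypothetical tools/list result; the real names and schemas depend on weather.py
tools_list_result = {
    "tools": [{
        "name": "get_weather",
        "description": "Get the weather for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }]
}

chat_tools = [mcp_tool_to_chat_tool(t) for t in tools_list_result["tools"]]
print(json.dumps(chat_tools, indent=2, ensure_ascii=False))

# If the model replies with a tool call, its JSON arguments become a JSON-RPC tools/call request
model_arguments = '{"city": "北京"}'
call_request = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "get_weather", "arguments": json.loads(model_arguments)},
}
print(json.dumps(call_request, ensure_ascii=False))
```

Because both sides describe parameters with JSON Schema, the bridge is mostly renaming fields. This is how an MCP server's tools can appear to the model just like the local `get_weather` tool in section 2.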

Summary

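- The OpenAI Agents SDK makes it quick to build an agent.

- To call a non-OpenAI API from the Agents SDK, pass the API address and API key with `AsyncOpenAI(base_url=BASE_URL, api_key=API_KEY)`.

- The open-source MLflow project can record the agent's runs for later analysis.

- An agent can call an MCP server over SSE by using `async with MCPServerSse`.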
